The Ethical and Legal Challenges of GitHub Copilot

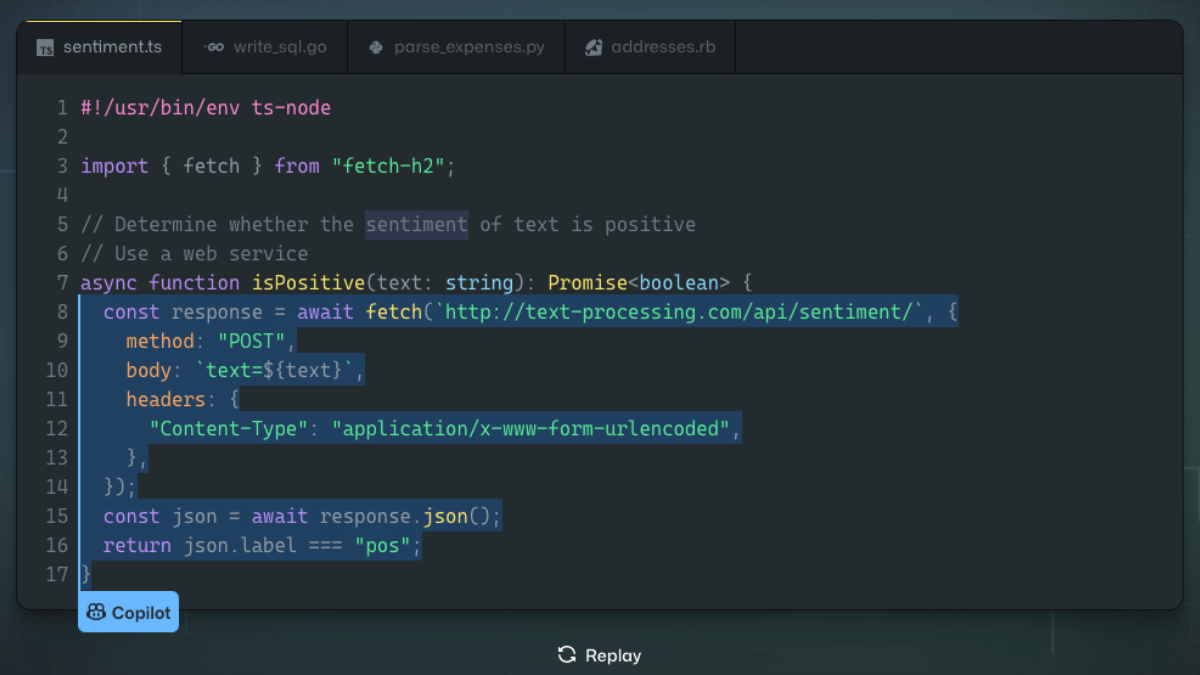

Earlier this week, Tim Davis, a developer and professor of Computer Science at Texas A&M, took to Twitter to highlight how GitHub’s artificial intelligence coding tool, Copilot, was producing code very similar to his own, posting a side-by-side comparison of the two snippets.

According to Davis, he used the prompt “sparse matrix transpose, cs_” only to have Copilot produce code very similar to his earlier, open-source work. However, the Copilot version did not include the LGPL license notice, which anyone reusing code under that license is required to keep, nor was there any indication that the code had been copied.

To make matters worse, another developer was able to replicate Davis’ findings. This happened even though Davis had opted out of having his code used in Copilot and, when giving the prompt, had asked Copilot to filter out suggestions matching public code.

Alex Graveley, the principal engineer at GitHub and the inventor of Copilot, responded that the code was different, though he admitted it was similar. He also encouraged anyone with a solution to this problem to “patent it.”

The news also comes as open-source programmer and lawyer Matthew Butterick announced that he is teaming up with several class action litigators to investigate a possible lawsuit against GitHub and its owner, Microsoft, over Copilot’s alleged violation of open-source authors’ rights.

Unfortunately for both GitHub and Microsoft, as we discussed last year, these legal questions were visible from a long way off and, in truth, were raised the day the product launched. Now, however, they are clearly starting to come to a head, both legally and ethically.

The Legal Questions

Copilot was first announced in June 2021, when it entered a technical preview open to a limited group of developers. It was then made available to all users in June of this year, after almost a year of limited access.

The idea was simple enough: Copilot would use an AI system to generate code when prompted by a user. This could be anything from finishing a single line of code to writing multiple lines from one prompt.

But almost immediately, there were concerns about how the AI was trained. According to GitHub, it was trained on “billions of lines of code” in dozens of programming languages. This included code on GitHub itself, a platform widely used by open-source developers.

In a recent analysis for The Register, Thomas Claburn and the experts he interviewed felt that the ingestion of code and the training of the AI were not likely to cause problems; instead, the risk lay in the code it produces.

According to Tyler Ochoa, a professor in the law department at Santa Clara University, “When you’re trying to output code, source code, I think you have a very high likelihood that the code that you output is going to look like one or more of the inputs because the whole point of code is to achieve something functional.”

However, that functionality could end up helping GitHub in this case. In the recent Google v. Oracle ruling, the Supreme Court found that, because software always has a functional component, it is different from books, films and other “literary works.”

In that case, the Supreme Court found that Google’s use of Oracle-owned Java API code in the Android operating system was fair use and therefore did not infringe Oracle’s copyright.

But the case may not be entirely relevant to Copilot. One of the key reasons the Supreme Court gave for its decision was that Oracle had not successfully entered the mobile market, so Google’s version was not a direct competitor.

With Copilot, it is very likely that generated code would be used in the same market as the original. Likewise, Copilot’s use of the code may have a different “purpose and character” than Google’s use in creating Android, setting up another challenge.

Right now, the fair use questions around software make it difficult to predict how a court might rule. This is due both to the limited litigation in this space and to the lack of clarity around this particular Supreme Court decision, especially given how fact-specific its findings were.

In short, this space was already in chaos before AI entered the picture, and it is now even more uncertain.

Questions of Ethics

However, the legal questions may not be the only ones. There are also serious ethical questions.

GitHub built much of its name and brand on open-source developers. Before its purchase by Microsoft, it was the leading tool for such development. Now, GitHub has launched a product that many developers feel goes against the ethos of open-source development, because it exploits open-source code while omitting the attribution and licensing requirements that come with it.

In an interview with Tim Anderson at DevClass, Jeremy Soller, the principal engineer at System76, called it “illegal source code laundering, automated by GitHub.” He went on to say that it makes it possible to find the code you want and, if it is under an incompatible license, keep probing Copilot until it reproduces it.

The issue is that, as unsettled as the law is in this space, the ethics are even less settled. Overall, the community’s response to Copilot’s use of open-source code has been negative. The main fear, as Soller pointed out, is that it could enable the use of open-source code in incompatible products, including closed-source ones.

However, even the use of the code for training has ruffled feathers. Though GitHub users can opt out of having their code used, as Davis showed, that doesn’t necessarily mean much when it comes to open-source code.

The reason is that open-source code, by its nature, is going to be copied and reused. So, even if the author of one project doesn’t want their work to be used in Copilot, if that code appears in another project where the option is left on, the code will still be used for training the AI.

As such, there’s no effective way for an open-source developer to opt out of the system. And, given what we’ve seen, it appears that in at least a small percentage of cases, estimated at around 1% according to Graveley, Copilot can produce longer snippets that match existing code.

With that in mind, it’s easy to see why open-source developers are wary of Copilot, even if they welcome AI more broadly.

Bottom Line

What strikes me as peculiar is just how aggressively GitHub, and by proxy Microsoft, is pushing boundaries here. Copilot raises difficult questions, both legally and ethically.

Between the Supreme Court decision and the backlash from the open-source community, it’s obvious that these waters have not been fully tested, from either a legal or an ethical perspective.

Even so, Microsoft and GitHub are making a massive play in this space, possibly emboldened by the Supreme Court’s decision. However, the backlash has already started, and legal challenges appear to be on the horizon.

Normally, you’d expect a company like GitHub to play it conservative in this kind of situation. However, it seems that GitHub is welcoming the challenges, both in and out of the courtroom.

What this means for GitHub and Copilot in the future is difficult to say, but this is likely the exact point where the issues surrounding AI in programming will come to a head.
